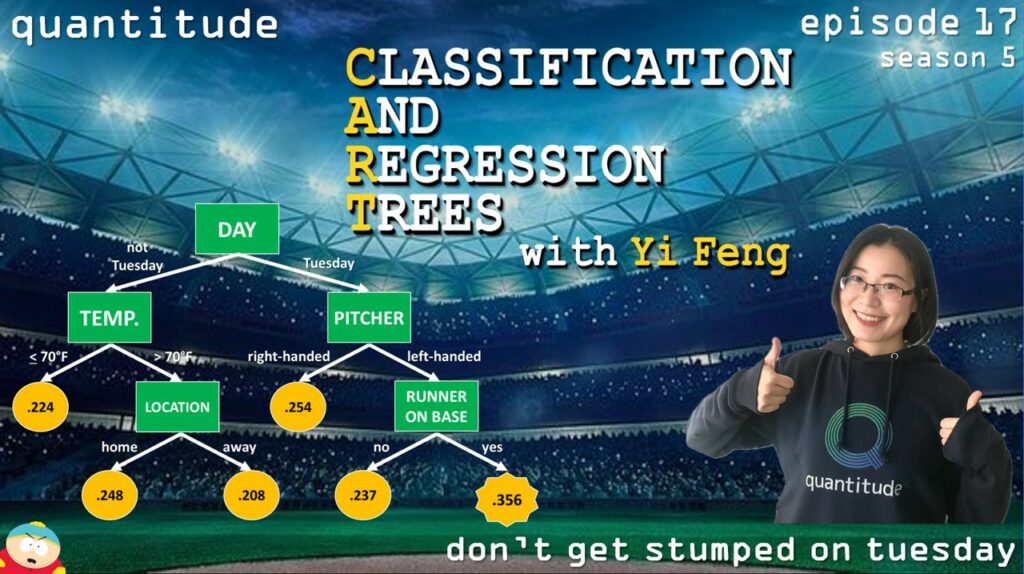

In this week’s episode, Greg and Patrick are honored to visit with Yi Feng, a quantitative methodologist at UCLA, who helps them understand classification and regression tree analysis. She describes the various ways these models can be used and how they can inform both prediction and explanation. Along the way they also discuss looking pensive, drunken 3-way interactions, Stephen Hawking, parlor tricks, Cartman, validation, dragon boats, anxiety, spam filters, hair loss, audio visualizations, overused tree analogies, rainbows & unicorns, rain in Los Angeles, and Moneyball.

Related Episodes

- S4E25: Cluster Analysis

- S3E20: The Rise of Machine Learning in the Social Sciences with Doug Steinley

- S3E19: Social Network Analysis with Tracy Sweet

- S1E20: Finite Mixture Modeling & Sir Mixture-A-Lot

Suggested Readings

Breiman, L., Friedman, J. H., Olshen, R. A., & Stone, C. J. (2017). Classification and regression trees. Routledge.

Hastie, T., Tibshirani, R., & Friedman, J. H. (2009). The elements of statistical learning: Data mining, inference, and prediction (2nd ed.). Springer.

King, M. W., & Resick, P. A. (2014). Data mining in psychological treatment research: A primer on classification and regression trees. Journal of Consulting and Clinical Psychology, 82, 895.

Loh, W.-Y. (2011). Classification and regression trees. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 1, 14-23.

Shmueli, G. (2010). To explain or to predict? Statistical Science, 25, 289-310.

Strobl, C., Malley, J., & Tutz, G. (2009). An introduction to recursive partitioning: Rationale, application, and characteristics of classification and regression trees, bagging, and random forests. Psychological Methods, 14, 323.

Sutton, C. D. (2005). Classification and regression trees, bagging, and boosting. Handbook of Statistics, 24, 303-329.